
1. Introduction

Load balancing distributes tasks across multiple operating units, such as FTP servers, web servers, and enterprise core application servers, so that they can complete the work together.

Load balancing provides a transparent and effective way to extend the bandwidth of servers and network devices, enhance network data processing capability, increase throughput, and improve network availability and flexibility.

The main functions of load balancing are:

1) To spread task processing across different processes, reducing the load on any single process and thereby expanding overall processing capacity.

2) To improve fault tolerance. Services commonly become unavailable because a machine goes down or a process behaves abnormally. In a load balancing system, multiple server processes provide the same service, so when one process becomes unavailable, the load balancer dispatches tasks to the other available processes, achieving high availability.
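The failover behaviour in point 2 can be sketched in a few lines of Python. This is a minimal illustration, not any particular product's implementation: the server names are hypothetical, and the `mark_down`/`mark_up` calls stand in for whatever health checks a real load balancer would run.

```python
from itertools import cycle


class FailoverBalancer:
    """Hypothetical sketch: rotate through servers, skipping unavailable ones."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)   # servers currently believed to be up
        self._order = cycle(self.servers)  # fixed rotation order

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def dispatch(self):
        """Return the next healthy server; unavailable ones are skipped."""
        for _ in range(len(self.servers)):
            server = next(self._order)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy server available")


lb = FailoverBalancer(["web1", "web2", "web3"])
lb.mark_down("web2")  # simulate a crashed process
print([lb.dispatch() for _ in range(4)])  # ['web1', 'web3', 'web1', 'web3']
```

Tasks that would have gone to `web2` are simply absorbed by the remaining servers until it is marked up again.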

2. When to use load balancing

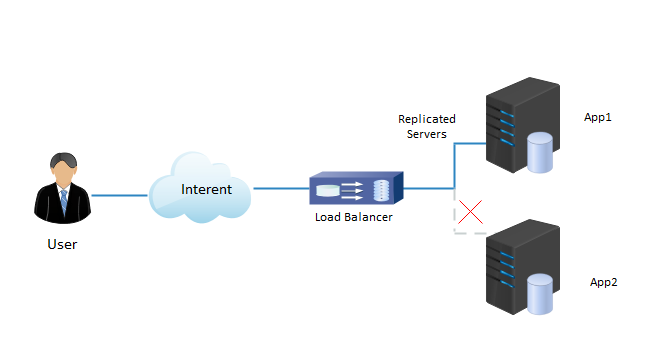

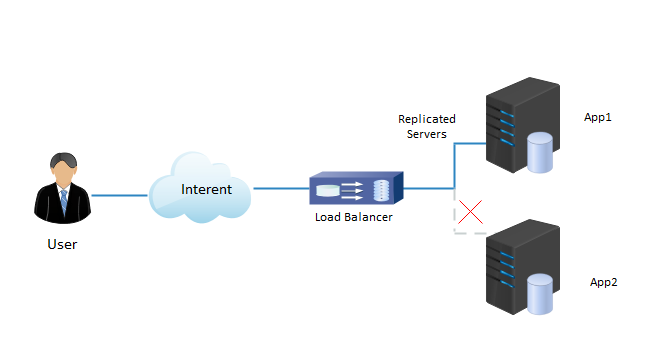

Consider first a web architecture without load balancing.

In this example, the user connects directly to the web server at yourdomain.com. If this single web server goes down, the user can no longer access the website. In addition, if many users try to access the server at the same time and it cannot handle the load, they may experience slow page loads or be unable to connect at all.

Adding one load balancer and one additional web server on the backend reduces this single point of failure: if one web server goes down, the load balancer can send users to the other. Commonly, all backend servers serve the same content, so users receive consistent content whichever server responds.

3. Load balancing algorithms

The load balancing algorithm determines which of the healthy backend servers is chosen for each request. Commonly used algorithms include the following.

3.1 Least Connection

With Least Connection, the load balancer selects the server with the fewest active connections. It is recommended when traffic results in longer sessions, because long-lived connections can pile up unevenly on servers chosen in simple rotation.
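A minimal sketch of Least Connection selection, assuming the balancer is told when each connection opens (`acquire`) and closes (`release`); the class and method names are illustrative, not taken from any specific load balancer.

```python
class LeastConnectionBalancer:
    """Hypothetical sketch: pick the server with the fewest open connections."""

    def __init__(self, servers):
        # Number of currently open connections per server.
        self.active = {s: 0 for s in servers}

    def acquire(self):
        # min() returns the first server with the lowest count, so ties
        # are broken by the order in which the servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection (session) on this server ends.
        self.active[server] -= 1


lb = LeastConnectionBalancer(["a", "b", "c"])
print([lb.acquire() for _ in range(3)])  # ['a', 'b', 'c']
lb.release("b")                          # b's long session finally ends
print(lb.acquire())                      # 'b'
```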

3.2 Round Robin

Round Robin means servers will be chosen sequentially. The load balancer will select the first server in its list for the first request, then move down the list in order, starting over at the top when it reaches the end.
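Round Robin needs nothing more than an index that advances and wraps around. The sketch below is illustrative only; the server names are hypothetical.

```python
class RoundRobinBalancer:
    """Hypothetical sketch: hand out servers in list order, wrapping around."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.index = 0  # position of the next server to hand out

    def next_server(self):
        server = self.servers[self.index]
        # Move down the list, starting over at the top after the last server.
        self.index = (self.index + 1) % len(self.servers)
        return server


lb = RoundRobinBalancer(["s1", "s2", "s3"])
print([lb.next_server() for _ in range(5)])  # ['s1', 's2', 's3', 's1', 's2']
```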

3.3 Source

With the Source algorithm, the load balancer selects a server based on a hash of the request's source IP address (the visitor's IP address). As long as the set of backend servers stays the same, a particular user will consistently connect to the same server.
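A minimal sketch of source-IP hashing. The choice of SHA-256 is an assumption made for illustration; the text does not specify a hash function. A stable digest is used instead of Python's built-in `hash()`, because `hash()` of a string is randomized between interpreter runs and would break the "same user, same server" property after a restart.

```python
import hashlib


def pick_server_by_source(source_ip, servers):
    """Hypothetical sketch: map a client IP to a server via a stable hash."""
    digest = hashlib.sha256(source_ip.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce modulo the pool size.
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["web1", "web2", "web3"]
first = pick_server_by_source("203.0.113.7", servers)
again = pick_server_by_source("203.0.113.7", servers)
print(first == again)  # True: the same visitor lands on the same server
```

Note that this simple modulo mapping reassigns most visitors whenever a server is added or removed; consistent hashing is a common refinement that limits that churn.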

4. Load balancing schemes

The main load balancing schemes are:

1) Even Task Distribution Scheme

2) Weighted Task Distribution Scheme

3) Sticky Session Scheme

4) Even Size Task Queue Distribution Scheme

5) Autonomous Queue Scheme

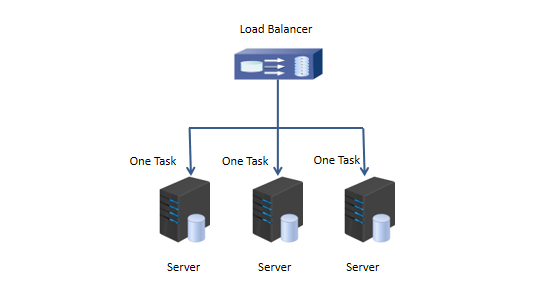

4.1 Even Task Distribution Scheme

Even task distribution is the simplest scheme: tasks are distributed evenly across all server processes. It can be implemented with either random or round-robin distribution.

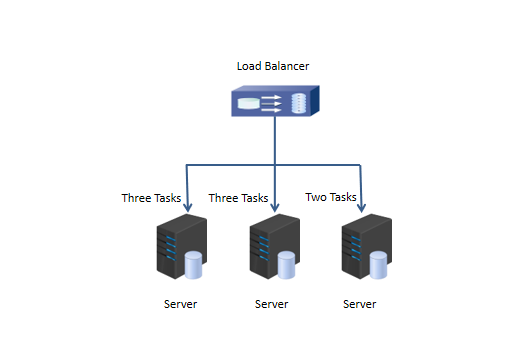
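The random variant of even distribution can be sketched as follows: each task independently picks a process uniformly at random, so the counts even out over many tasks rather than exactly. The process names, task count, and seed are illustrative assumptions.

```python
import random
from collections import Counter


def distribute_randomly(tasks, servers, seed=None):
    """Hypothetical sketch: assign each task to a uniformly random server."""
    rng = random.Random(seed)  # seeded so the demo is reproducible
    return {task: rng.choice(servers) for task in tasks}


assignment = distribute_randomly(range(9000), ["p1", "p2", "p3"], seed=42)
# Each process receives roughly 3000 tasks; exact counts vary with the seed.
print(Counter(assignment.values()))
```

Round robin gives exactly equal counts; random choice avoids keeping shared state across balancer instances, at the cost of short-term imbalance.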

4.2 Weighted Task Distribution Scheme

The weighted distribution scheme assigns each server process a weight, and each process receives a number of tasks proportional to its weight. For example, if the capabilities of three processes are in the ratio 3:3:2, they can be given weights of 3:3:2. Out of every eight tasks, three then go to the first process, three to the second, and two to the third.

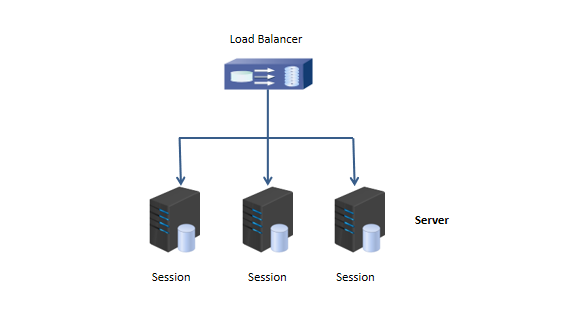
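One common way to realize the 3:3:2 example is smooth weighted round robin (the variant used by nginx). It is shown here as one possible technique, not as the method the text prescribes. Each round, every process's running score grows by its weight, the highest score wins, and the winner's score drops by the total weight, which interleaves the processes instead of sending three tasks in a row to the same one.

```python
class SmoothWeightedRoundRobin:
    """Sketch of smooth weighted round robin; process names are hypothetical."""

    def __init__(self, weights):
        self.weights = dict(weights)                  # process -> weight
        self.current = {p: 0 for p in self.weights}  # running scores
        self.total = sum(self.weights.values())

    def next_process(self):
        # Every process's score grows by its weight...
        for p, w in self.weights.items():
            self.current[p] += w
        # ...the highest score wins (the first one listed on ties)...
        chosen = max(self.current, key=self.current.get)
        # ...and the winner pays back the total weight.
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"p1": 3, "p2": 3, "p3": 2})
print([wrr.next_process() for _ in range(8)])
# ['p1', 'p2', 'p3', 'p1', 'p2', 'p3', 'p1', 'p2']
```

Within every eight tasks the counts are exactly 3, 3 and 2, matching the weights.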

4.3 Sticky Session Scheme

In the sticky session scheme, all tasks (e.g. HTTP requests) belonging to the same session (e.g. the same user) are sent to the same server, so any session values stored on that server are available to subsequent tasks (requests). In other words, sticky session load balancing distributes sessions, rather than individual tasks, to servers.

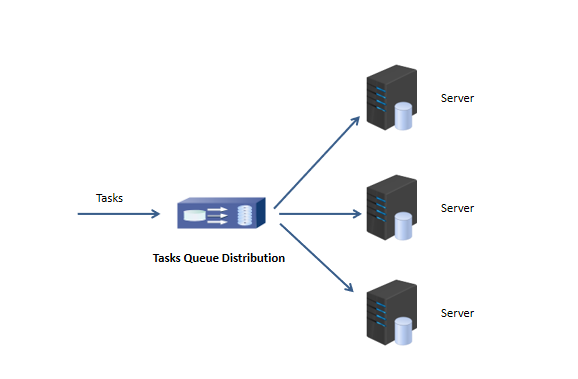
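A minimal sketch of sticky sessions, assuming each request carries a session identifier (for example from a cookie). The first request of a session picks a server round-robin; the choice is remembered and reused. The session ids and server names are hypothetical.

```python
class StickySessionBalancer:
    """Hypothetical sketch: bind each session to one server for its lifetime."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.next_index = 0
        self.session_to_server = {}  # session id -> server assigned to it

    def route(self, session_id):
        if session_id not in self.session_to_server:
            # First task of a new session: assign a server round-robin.
            self.session_to_server[session_id] = self.servers[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.servers)
        # Every later task of the same session goes to the same server.
        return self.session_to_server[session_id]


lb = StickySessionBalancer(["s1", "s2"])
print([lb.route(sid) for sid in ["alice", "bob", "alice", "carol", "bob"]])
# ['s1', 's2', 's1', 's1', 's2']
```

Because sessions, not tasks, are balanced, one very busy session can still load a single server heavily.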

4.4 Even Size Task Queue Distribution Scheme

In the even size task queue distribution scheme, the load balancer creates a task queue of the same size for each process, holding the tasks that process is to handle. A process that handles tasks quickly drains its queue quickly, so the load balancer sends it more tasks to refill it; a process that handles tasks slowly drains its queue slowly and receives fewer tasks. In this way, the load balancer takes each process's processing capability into account when distributing tasks.
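The refill behaviour can be illustrated with a small discrete-time simulation. The "speeds" (tasks finished per tick), queue size, and tick count are illustrative assumptions; the point is that topping every queue back up to the same size automatically sends more work to the faster process.

```python
from collections import deque


def simulate_even_size_queues(num_tasks, speeds, queue_size, ticks):
    """Hypothetical simulation: count how many tasks each process is sent."""
    pending = deque(range(num_tasks))
    queues = {p: deque() for p in speeds}
    dispatched = {p: 0 for p in speeds}

    def refill():
        # The load balancer tops every queue back up to the same fixed size.
        for p, q in queues.items():
            while len(q) < queue_size and pending:
                q.append(pending.popleft())
                dispatched[p] += 1

    refill()
    for _ in range(ticks):
        for p, q in queues.items():
            # Each process finishes up to `speeds[p]` tasks per tick.
            for _ in range(min(speeds[p], len(q))):
                q.popleft()
        refill()
    return dispatched


print(simulate_even_size_queues(1000, {"fast": 3, "slow": 1}, queue_size=4, ticks=10))
# {'fast': 34, 'slow': 14}
```

Both processes start with 4 queued tasks; afterwards the fast one needs 3 new tasks per tick and the slow one only 1.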

4.5 Autonomous Queue Scheme

The autonomous queue scheme actually has no load balancer. All server processes take tasks from a shared queue and execute them. If one process fails, the other processes continue to execute tasks. The task queue therefore does not need to know the state of the server processes; each server process only needs to know its task queue and execute the tasks it takes.

The autonomous queue scheme also accounts for the processing capacity of each process: the faster a process handles tasks, the more often it takes new tasks from the queue.

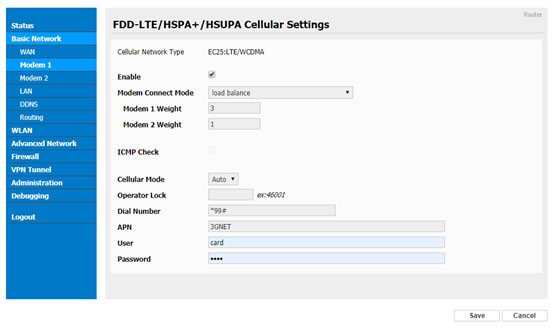
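A minimal sketch of the autonomous queue scheme using Python threads and the standard `queue.Queue`. The worker names, per-task delays, and the simulated failure (`fail_after`) are illustrative assumptions. No component assigns tasks: each worker pulls its own, so a faster worker naturally takes more, and a worker that stops does not block the rest.

```python
import queue
import threading
import time


def worker(name, tasks, seconds_per_task, done, lock, fail_after=None):
    """Hypothetical worker: pull tasks from the shared queue until it is empty."""
    handled = 0
    while True:
        if fail_after is not None and handled >= fail_after:
            return  # simulated failure: this worker stops taking tasks
        try:
            task = tasks.get_nowait()  # the worker pulls work itself
        except queue.Empty:
            return
        time.sleep(seconds_per_task)  # stand-in for real processing
        with lock:
            done[name].append(task)
        handled += 1


tasks = queue.Queue()
for i in range(30):
    tasks.put(i)

done = {"fast": [], "slow": [], "flaky": []}
lock = threading.Lock()
threads = [
    threading.Thread(target=worker, args=("fast", tasks, 0.001, done, lock)),
    threading.Thread(target=worker, args=("slow", tasks, 0.005, done, lock)),
    threading.Thread(target=worker, args=("flaky", tasks, 0.001, done, lock, 2)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Exact counts depend on thread timing; all 30 tasks are always completed.
print({name: len(ids) for name, ids in done.items()})
```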

5. Load Balance in the WL-R520 4G Router

The WL-R520 can have two built-in 4G modems, which extend bandwidth and provide backup between two SIM cards. Its load balance function is based on the weighted task distribution scheme: the WL-R520 distributes 4G traffic across the two links according to the configured weights.
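To make the idea concrete, here is a hypothetical sketch of weighted distribution over two 4G links with backup. It is not the WL-R520 firmware and does not reflect its configuration interface. It assumes that traffic is balanced per new connection, that the links are named `modem1` and `modem2`, and that a 2:1 weight is configured; when one link is down, every new connection uses the other.

```python
class DualModemBalancer:
    """Hypothetical sketch: weighted choice between two 4G links with failover."""

    def __init__(self, weights):
        self.weights = dict(weights)                 # link name -> weight
        self.score = {link: 0 for link in self.weights}
        self.up = set(self.weights)                  # links currently usable

    def set_link_state(self, link, is_up):
        if is_up:
            self.up.add(link)
        else:
            self.up.discard(link)

    def pick_link(self):
        """Choose a link for a new connection (smooth weighted round robin)."""
        live = [link for link in self.weights if link in self.up]
        if not live:
            raise RuntimeError("both 4G links are down")
        total = sum(self.weights[link] for link in live)
        for link in live:
            self.score[link] += self.weights[link]
        chosen = max(live, key=self.score.get)
        self.score[chosen] -= total
        return chosen


lb = DualModemBalancer({"modem1": 2, "modem2": 1})
print([lb.pick_link() for _ in range(6)])
# ['modem1', 'modem2', 'modem1', 'modem1', 'modem2', 'modem1']
lb.set_link_state("modem2", False)  # e.g. SIM 2 loses signal
print([lb.pick_link() for _ in range(3)])
# ['modem1', 'modem1', 'modem1']
```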
